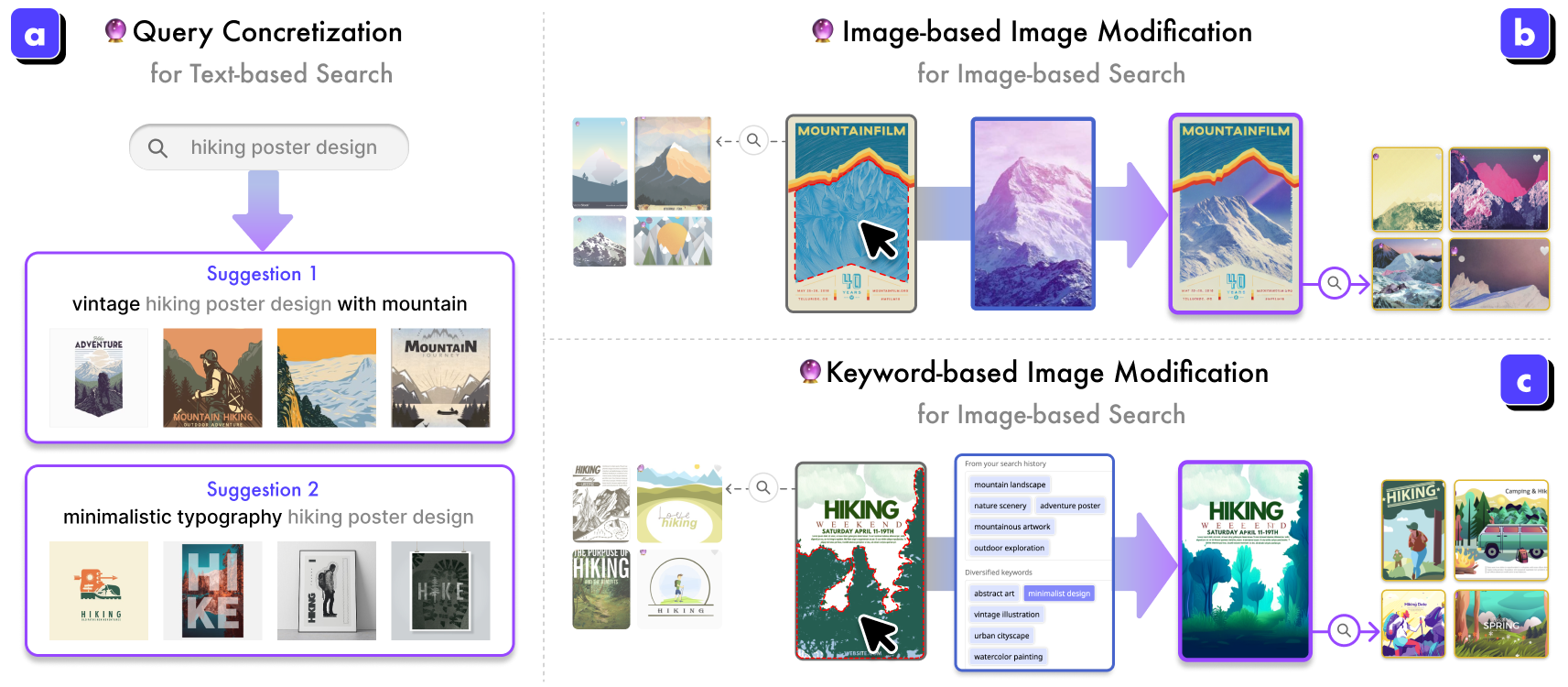

🔮 GenQuery is an interactive visual search system that lets users concretize an abstract text query [a], express search intent through visuals [b], and diversify their search intent based on their search history [c]. With these three features, users can express their visual search intent easily and accurately and explore a more diverse and creative visual search space.

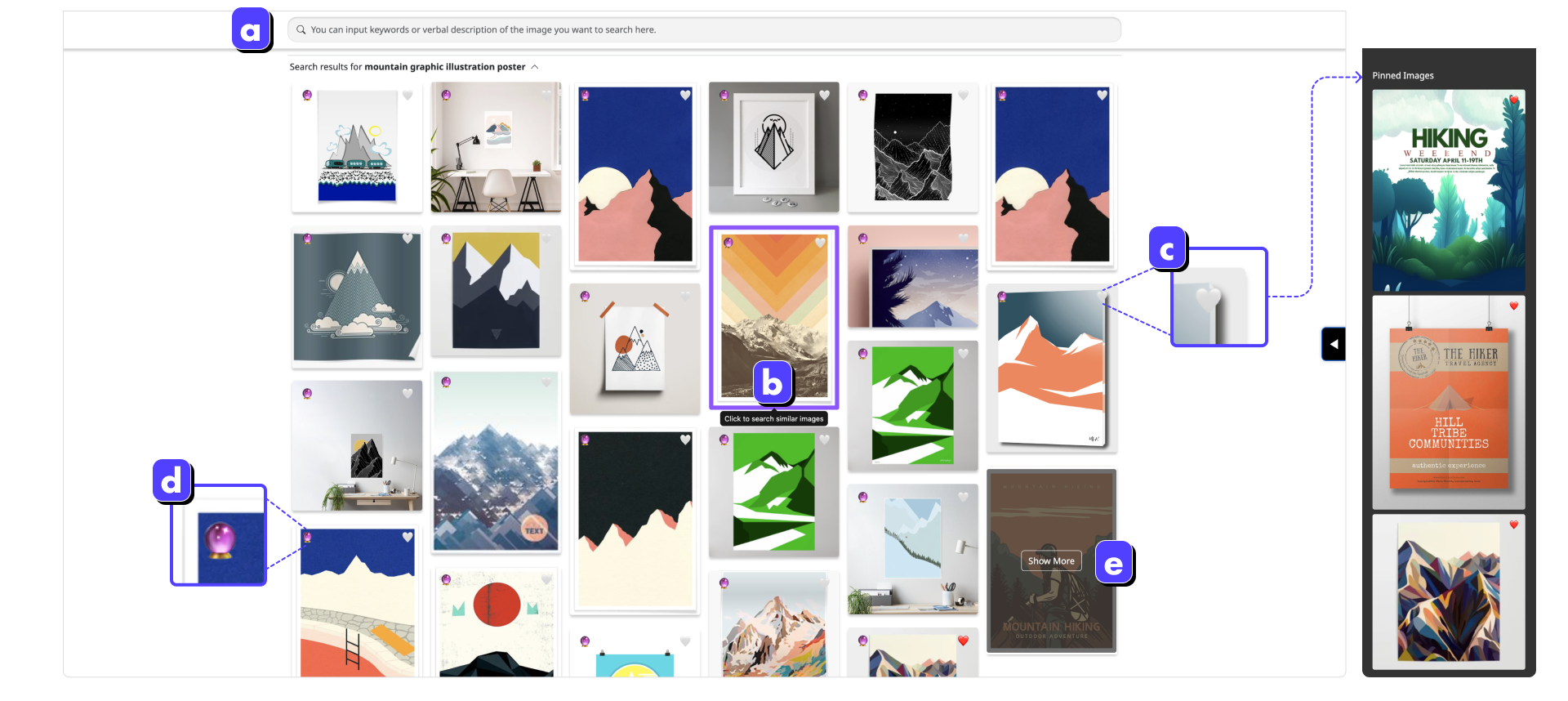

🔮 GenQuery provides an interface similar to popular visual search tools like Pinterest. Users can enter a text query [a] (text-based search) or click an image in the search results [b] (image-based search) to find the images they want, and save the ones they like [c].

Beyond these basic features, GenQuery supports Query Concretization when the user writes a query in [a]. When the user clicks [d], it also supports Image-based Image Modification and Keyword-based Image Modification, which help the user find more intent-aligned or more diversified images.

Our formative study found that the visual search process is inefficient because the user's text query in the initial text-based search is vague. To address this, we propose a Query Concretization interaction that uses LLM prompting within the visual search process.
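As a rough illustration of what an LLM-prompting step for query concretization could look like, here is a minimal Python sketch. It is not GenQuery's actual implementation: the prompt wording and the names `build_concretization_prompt`, `parse_suggestions`, `concretize`, and `fake_llm` are all hypothetical, and the LLM is passed in as a plain function so that no particular model or API is assumed.

```python
import re
from typing import Callable, List


def build_concretization_prompt(query: str, n: int = 3) -> str:
    """Build a prompt asking an LLM for n more concrete versions of a vague query.

    The wording is an assumption made for this sketch, not the paper's prompt.
    """
    return (
        f'A user typed this visual search query: "{query}".\n'
        f"Suggest {n} more concrete versions of it, one per line, "
        "each adding specific visual details such as subject, style, or color."
    )


def parse_suggestions(response: str, n: int = 3) -> List[str]:
    """Turn the model's reply into at most n clean suggestion strings."""
    suggestions = []
    for line in response.splitlines():
        # Drop a leading list marker such as "1.", "2)", "-" or "*".
        cleaned = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
        if cleaned:
            suggestions.append(cleaned)
    return suggestions[:n]


def concretize(query: str, llm: Callable[[str], str], n: int = 3) -> List[str]:
    """Ask the given LLM function for concrete alternatives to a vague query."""
    return parse_suggestions(llm(build_concretization_prompt(query, n)), n)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return (
        "1. minimalist poster with bold typography\n"
        "2. retro poster in warm colors\n"
        "- hand-drawn poster with pastel tones\n"
    )


if __name__ == "__main__":
    for suggestion in concretize("poster design", fake_llm):
        print(suggestion)
```

In a real system, `fake_llm` would be replaced by a call to an actual language model, and the returned suggestions would be offered to the user as candidate queries for the text-based search.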

When users had a concrete target image in mind, they wanted to use the image modality to edit their subsequent queries in image-based search. To help users find more intent-aligned search results, we propose Image-based Image Modification for the subsequent visual search query.

An essential aspect of visual search is finding diversified and unexpected ideas, in addition to finding intent-aligned images. Our formative study found that users turned to the text modality when they wanted to see more diversified and different images. We therefore propose a Text-based Image Modification interaction to support the divergent phase of visual search.

The three interactions of 🔮 GenQuery allowed users to express their intent intuitively and accurately, so that they found more satisfying, diversified, and creative ideas. For more details on the findings of our user study, please check our paper!

@inproceedings{son2023genquery,
  title={GenQuery: Supporting Expressive Visual Search with Generative Models},
  author={Kihoon Son and DaEun Choi and Tae Soo Kim and Young-Ho Kim and Juho Kim},
  year={2023},
  eprint={2310.01287},
  archivePrefix={arXiv},
  primaryClass={cs.HC}
}

This research was supported by the KAIST-NAVER Hypercreative AI Center.